My Projects | Overview

Over the past few years, I have led and contributed to a variety of projects, from simple to complex. They range from in-house prototypes designed to enhance the user experience in radiology to academic and R&D projects such as vision-based robot swarms and agent-based models. I take pride in contributing to projects that are exciting, interdisciplinary, and technically challenging, whether in industry or academia. I managed most of the projects below independently, and they taught me that precise, thoughtful planning lays the foundation for creative experimentation.

reFrame

Evidence-based mental reframing webapp using LLMs and methods from positive psychology.

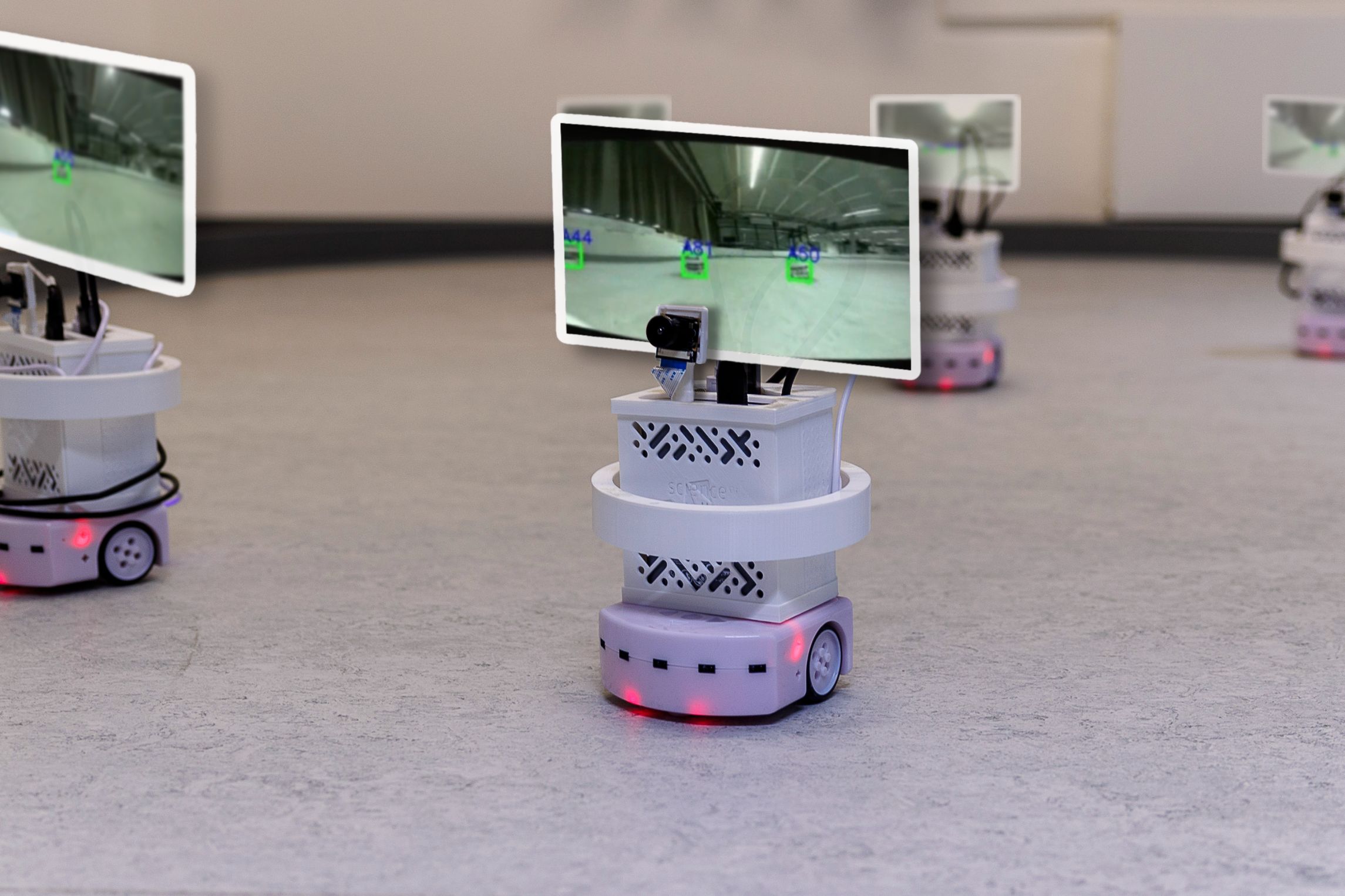

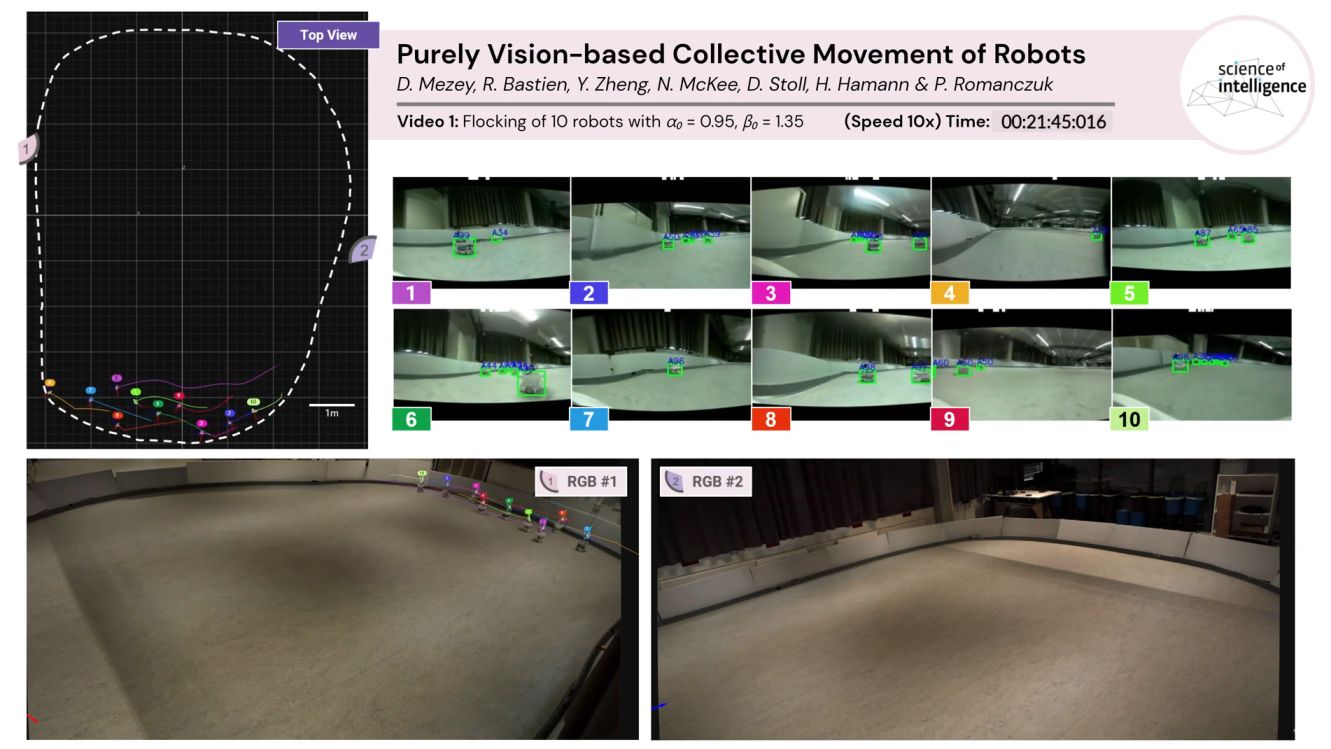

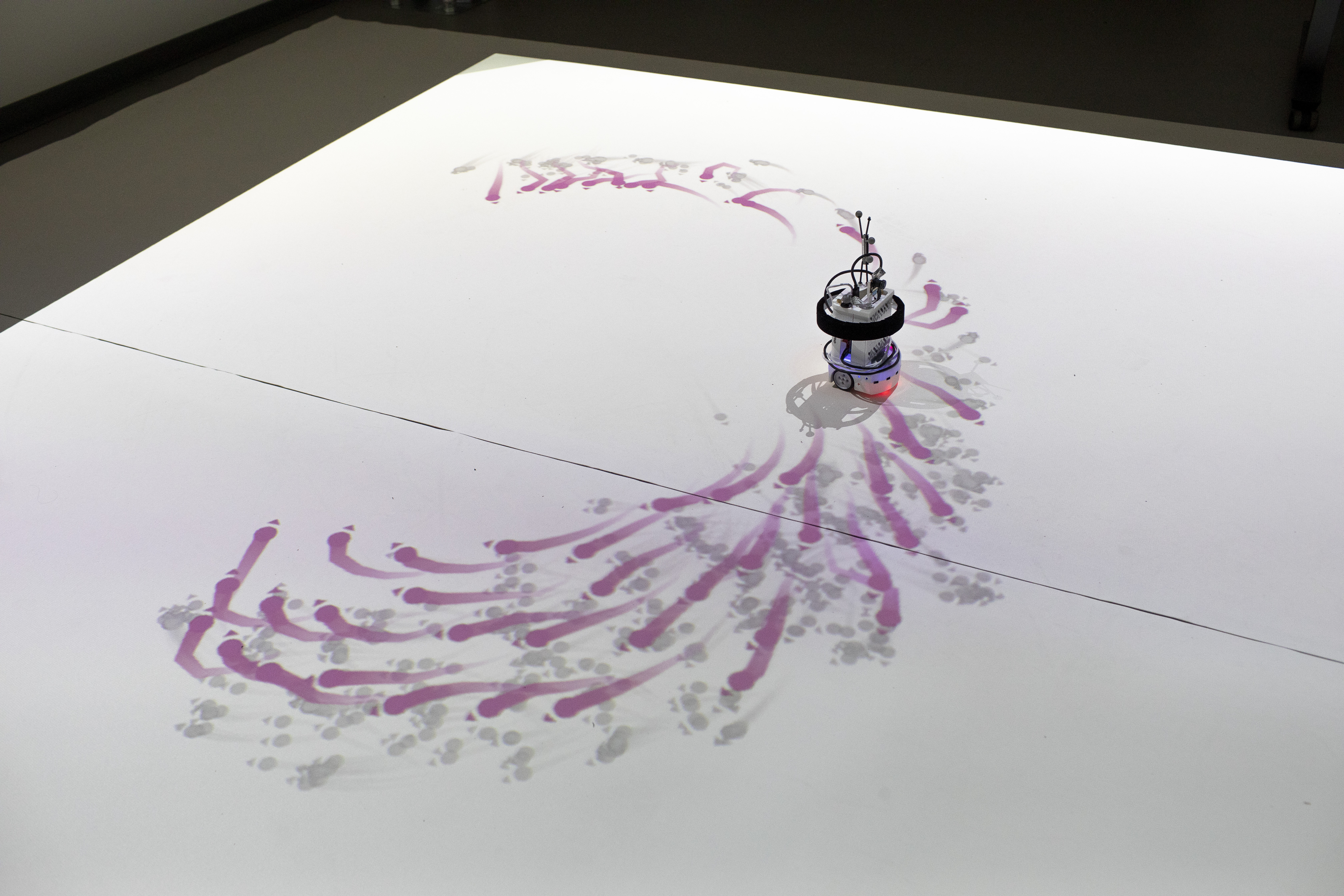

Vision-based Swarm

A flocking robot swarm that relies on cameras alone.

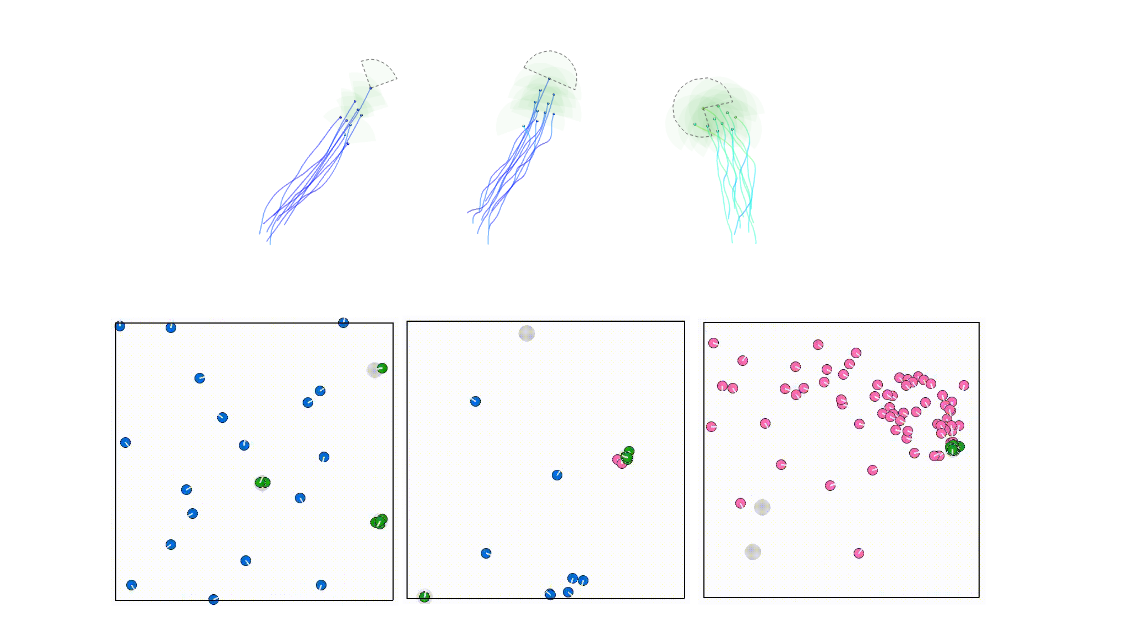

Agent-based Modeling

Perception-driven modeling projects.

Collective Foraging

Studying sociality in simulated and human groups.

CoBeXR

A spatial augmented reality system.

Human | Swarm

A human-swarm interaction study.

ModCollBehavior25

A university course I designed and organized.

Neuroimaging

My work with two-photon microscopes.

Medical Tech

Software prototyping to improve the user experience in radiology.

reFrame | Cognitive Reframing Webapp

Challenging unhelpful beliefs with evidence-based methods

What we tell ourselves influences every aspect of our well-being.[1] Cognitive reframing, which helps us regulate our emotions by changing negative thought patterns, is one of the most powerful skills we can learn.[2] reFrame is a tool for practicing this skill. Just write a short story about an event that still bothers you. reFrame then helps you identify unhelpful beliefs, rate their impact, and practice generating kinder, testable alternatives. To do so, it uses techniques grounded in and tested by positive psychology.

The reFrame process is simple. First, you write a short first-person story describing what happened and how it made you feel. Next, the app breaks your story down into its main components: events, feelings, and beliefs. Then, using the CAVE and ABCD methods, you identify unhelpful beliefs, rate their impact, and challenge them. Finally, you rewrite your story with a more balanced and helpful framing, practicing cognitive reframing step by step.